Abstract

We present lilGym,* a new benchmark for language-conditioned reinforcement learning in visual environments. lilGym is based on 2,661 highly compositional, human-written natural language statements grounded in an interactive visual environment.

We annotate every statement with an executable Python program that represents its meaning, which enables exact reward computation in every possible world state. Each statement is paired with multiple start states and reward functions to form thousands of distinct Markov Decision Processes of varying difficulty. We evaluate different models and learning regimes on lilGym. Our results and analysis show that while existing methods achieve non-trivial performance, lilGym poses a challenging open problem. lilGym is available at https://github.com/lil-lab/lilgym.

*lilGym stands for Language, Interaction, and Learning Gym.
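To make the pairing of statements, start states, and reward functions more concrete, here is a minimal sketch. It is not lilGym's API: the state encoding, the `ToyTask` class, the `STOP` action name, the target truth value, and the +1/-1/0 reward values are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Item = Tuple[str, str]    # (color, shape): a hypothetical encoding
State = List[List[Item]]  # one list of items per box


def exists_yellow_triangle(state: State) -> bool:
    """Toy program for the made-up statement 'There is a yellow triangle.'"""
    return any(item == ("yellow", "triangle") for box in state for item in box)


@dataclass
class ToyTask:
    """One toy decision process: a program, a target truth value, a start state."""
    program: Callable[[State], bool]
    target: bool
    start: State

    def reward(self, state: State, action: str) -> float:
        # Assumed reward scheme: only the terminating action is scored.
        if action != "STOP":
            return 0.0
        # The program gives an exact truth value for any state.
        return 1.0 if self.program(state) == self.target else -1.0


# Pairing one statement with several start states and targets yields several tasks.
start_states: List[State] = [
    [[("yellow", "triangle")], [], []],
    [[("blue", "square")], [], []],
]
tasks = [
    ToyTask(exists_yellow_triangle, target, start)
    for start in start_states
    for target in (True, False)
]
```

Because the program can be executed on any state, the reward needs no learned or approximate judge; a single statement expands into one task per combination of start state and target.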

Examples

Tower-Scratch

Tower-FlipIt

Scatter-Scratch

Scatter-FlipIt

Annotation examples

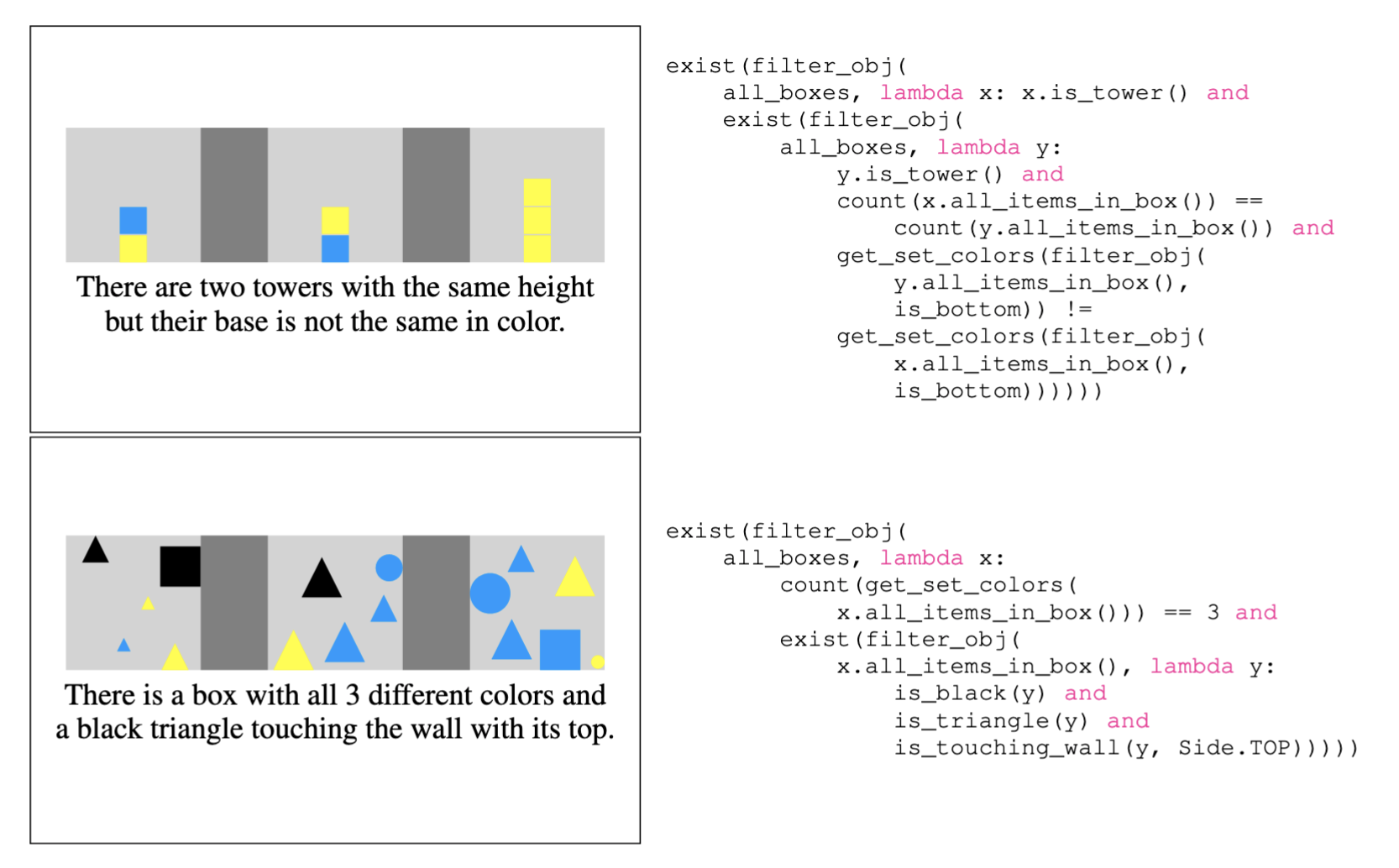

Example sentences, shown with the images displayed alongside them during annotation (left), and their annotated Python program representations (right). Each sentence and its logical form evaluate to True for the corresponding image.
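As a rough illustration of what a program annotation can look like, the sketch below pairs a made-up compositional sentence with a Python predicate and evaluates it on a toy image description. The sentence, the function name, and the image encoding are hypothetical and are not taken from the dataset.

```python
from typing import List, Tuple

Item = Tuple[str, str]   # (color, shape): a hypothetical encoding
Image = List[List[Item]] # one list of items per box

# Made-up sentence: "There is exactly one box with a black item."
def exactly_one_box_with_black_item(image: Image) -> bool:
    boxes_with_black = [
        box for box in image
        if any(color == "black" for color, _shape in box)
    ]
    return len(boxes_with_black) == 1


# Toy image description with three boxes; only the first contains a black item.
image: Image = [
    [("black", "circle"), ("blue", "square")],
    [("yellow", "triangle")],
    [],
]

truth_value = exactly_one_box_with_black_item(image)
```

Here the counting quantifier ("exactly one") and the nested existential ("with a black item") map onto a length check and `any`, which is the kind of compositional structure a program annotation has to capture.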

BibTeX

@inproceedings{wu-etal-2023-lilgym,
    title = "lil{G}ym: Natural Language Visual Reasoning with Reinforcement Learning",
    author = "Wu, Anne and
      Brantley, Kiante and
      Kojima, Noriyuki and
      Artzi, Yoav",
    booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
    year = "2023",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.acl-long.512",
    pages = "9214--9234",
}